Clawdbot, which was recently renamed Moltbot, is defined by its creators as “the AI that actually does things.” Due to its expanded agentic capabilities and built-in autonomy, it has collected over 85,000 Github stars and has been forked over 11500 times already, all in about a week.

It can browse the web, summarize PDFs, schedule calendar entries, shop for you, read and write files, and send emails on your behalf. It already has integrations with several widely used messaging and mailing applications from WhatsApp to Telegram. It can take screenshots and analyze and control your desktop applications. But the key is that it has persistent memory. It can remember interactions from weeks ago, even months. Due to this, it has the ability to be an always available personal AI assistant that works.

Moltbot is Powerful, but is it Secure?

Moltbot feels like a glimpse into the science fiction AI characters we grew up watching at the movies. For an individual user, it can feel transformative. For it to function as designed, it needs access to your root files, to authentication credentials, both passwords and API secrets, your browser history and cookies, and all files and folders on your system. You can trigger its actions by sending a message on WhatsApp or any other messaging app and it will continue working on your laptop until it achieves the said task.

But what is cool isn’t necessarily secure. In the case of autonomous agents, security and safety cannot be afterthoughts.

Security In the Context of Usage

Understanding the attack surface in the context of how Moltbot is used is important. Let’s look at some use cases for an autonomous assistant and evaluate the associated risks.

Scenario 1: Research a topic and build summarized social media content

Moltbot can search the web and ingest search results into your terminal (or IDE, wherever you are operating Moltbot).

Risk: Some of these web results used for the research can have indirect prompt injection attacks hidden in the HTML payload. Depending on the attack objectives, Moltbot can execute malicious commands, read secrets and publish the information in the form of social media content with the confidential data built in, all without a human-in-the-loop check.

Scenario 2: Read my Telegram messages and send me action items

Moltbot can access your Telegram account because it has your passwords and can read everything that exists.

Risk: Malicious links from unknown senders will be treated with the same level of security as a message from family. Attack payloads can be hidden inside a “Good morning” message forwarded on WhatsApp or Signal. In order to do its job well, the agent has to gain access to the decrypted message, so even the more secure messaging channels will be vulnerable with this level of autonomy. But that’s not the end game. Moltbot has persistent memory, which means the malicious instructions hidden in a forwarded message are now available in its context even after a week. This exposes your system to a dangerous delayed multi-turn attack chain, which most system guardrails do not have the capability to detect and block.

Scenario 3: Use a hosted Moltbot skill for yourself

Due to the increased autonomy, and the prevailing sentiment around democratizing the use of open source AI, several developers are hosting their Moltbot skills. They do so with a positive mindset of sharing the solution so that the users next in line do not have to spend time figuring things out. It increases access and speeds up development.

Risk: There will be a mix of skills hosted around the world. These skills will be onboarded onto your assistant without any context filtering or human-in-the-loop checks. Malicious instructions hidden inside the descriptions or code will get added to the assistant’s memory. These commands can get executed and steal secrets, send private conversations, even steal business critical data.

Moltbot does not maintain enforceable trust boundaries between untrusted inputs (web content, messages, third-party skills) and high-privilege reasoning or tool invocation. As a result, externally sourced content can directly influence planning and execution without policy mediation. Moreover, the Moltbot attack surface rises more due to the excessive agency built into its architecture. It needs the agency to be a helpful assistant, but it expands the so called “lethal trifecta of autonomous agents,” making it a risky experiment.

Expanding the “Lethal Trifecta” with a fourth capability

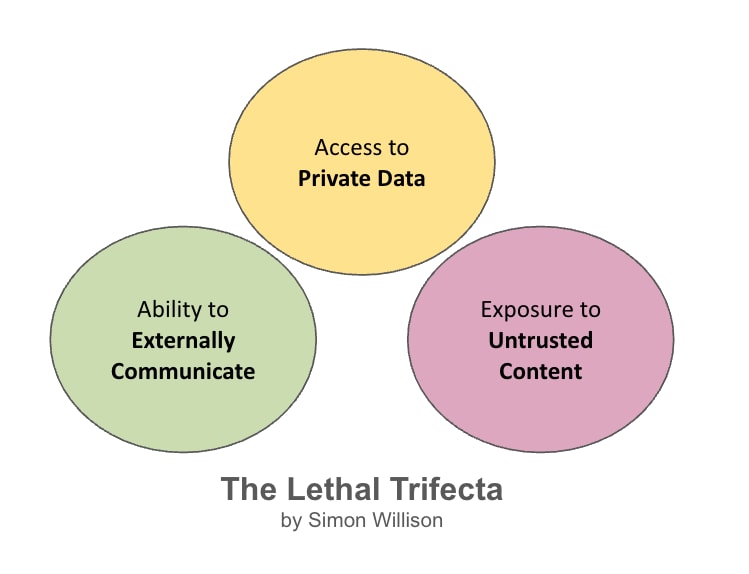

Simon Willison coined the term the Lethal Trifecta for AI Agents in July 2025. He claims that AI agents are, by design, vulnerable since they form an intersection of three capabilities:

- Access to Private Data (credentials, personal information, business data)

- Exposure to Untrusted Content (web, messages, third-party integrations)

- Ability to Externally Communicate (send messages, make API calls, execute commands)

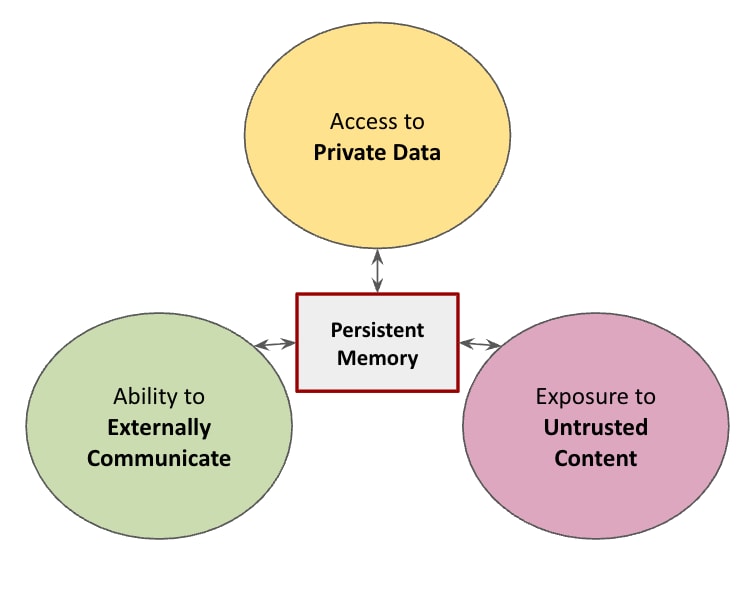

But what if there’s a fourth capability that expands this attack surface and makes it easier to attack your AI agent? The rapid surge of popularity with Moltbot brought a new capability that’s desired by users of autonomous agents: persistent memory.

With persistent memory, attacks are no longer just point-in-time exploits. They become stateful, delayed-execution attacks.

Persistent memory acts as an accelerant, amplifying the risks highlighted by the lethal trifecta.

Malicious payloads no longer need to trigger immediate execution on delivery. Instead, they can be fragmented, untrusted inputs that appear benign in isolation, are written into long-term agent memory, and later assembled into an executable set of instructions. This enables time-shifted prompt injection, memory poisoning, and logic bomb–style activation, where the exploit is created at ingestion but detonates only when the agent’s internal state, goals, or tool availability align.

Mapping Moltbot Vulnerabilities to the OWASP Top 10 for Agents

With increased agency and near-absent governance protocols, Moltbot is susceptible to a full spectrum failure on the OWASP Top 10 for Agentic Applications. In the table below, we map Moltbot’s vulnerabilities to the OWASP top 10 framework.

| OWASP Agent Risk | Moltbot Implementation |

| A01: Prompt Injection (Direct & Indirect) | Web search results, messages, third-party skills inject instructions that the agent executes. |

| A02: Insecure Agent Tool Invocation | Tools (bash, file I/O, email, messaging) are invoked based on reasoning that includes untrusted memory sources. |

| A03: Excessive Agent Autonomy | Single agents have filesystem root access, credential access, and network communication, with no privilege boundaries or approval gates. |

| A04: Missing Human-in-the-Loop Controls | No approval required for destructive operations (rm -rf, credential usage, external data transmission) even when influenced by old, untrusted memory. |

| A05: Agent Memory Poisoning | All memory is undifferentiated by source. Web scrapes, user commands, and third-party skill outputs are stored identically with no trust levels or expiration. |

| A06: Insecure Third-Party Integrations | Third-party "skills" run with full agent privileges and can write directly to persistent memory without sandboxing. |

| A07: Insufficient Privilege Separation | Single agent handles untrusted input ingestion AND high-privilege action execution with shared memory access. |

| A08: Supply Chain Model Risk | Agent uses upstream LLM without validation of fine-tuning data or safety alignment. |

| A09: Unbounded Agent-to-Agent Actions | Moltbot operates as a single monolithic agent, but future multi-agent versions could enable unconstrained agent communication. |

| A10: Lack of Runtime Monitoring & Guardrails | No policy enforcement layer between memory retrieval → reasoning → tool invocation. No anomaly detection on memory access patterns or temporal causation tracking. |

Moltbot is an unbounded attack surface with access to your credentials.

Persistent memory is a must-have capability for future AI assistants. Artificial General Intelligence (AGI) aims to achieve human-level intelligence across time, not limited to a single day or a session. Humans reason through life using accumulated experience, selective recall and learned abstractions. This continuity of state allows long-term planning and coherent decision making. Persistent memory introduces a durable state across sessions, allowing an AI agent to learn and evolve over time. It is a step in the right direction to achieve AGI. But unmanaged persistent memory in an autonomous assistant is like adding gasoline to the lethal trifecta fire.

The future belongs to AI assistants that are smart and secure

Moltbot is being claimed as the closest thing to AGI. Being always on, well reasoned and efficient, it almost gives superhuman capability to its user. But this level of autonomy, if not governed, can give rise to irreversible security incidents. Even with hardening techniques on the control UI, the attack surface continues to remain unmanageable and unpredictable.

The authors’ opinion is that Moltbot is not designed to be used in an enterprise ecosystem.

Moltbot’s rise in popularity is being accompanied by important security questions around the architecture of autonomous systems. The future of AI assistants is not just about smarter agents, it’s about secure agents that can be governed and are built with an understanding of when not to act.

Read the OWASP Agentic AI Survival Guide to understand how to secure against known agentic threats.