Understanding your cloud perimeter is critical for security teams, as it defines the boundary between trusted and untrusted access. You can’t prevent unauthorized entry and enforce least privilege unless you know exactly what your perimeter is.

In reality, though, defining and enforcing this boundary proves challenging, and subtle configuration mistakes often lead to holes in the perimeter. Our research identified two primary classes of misconfigurations that can lead to identity-driven initial access vectors in AWS — service exposure and access by design.

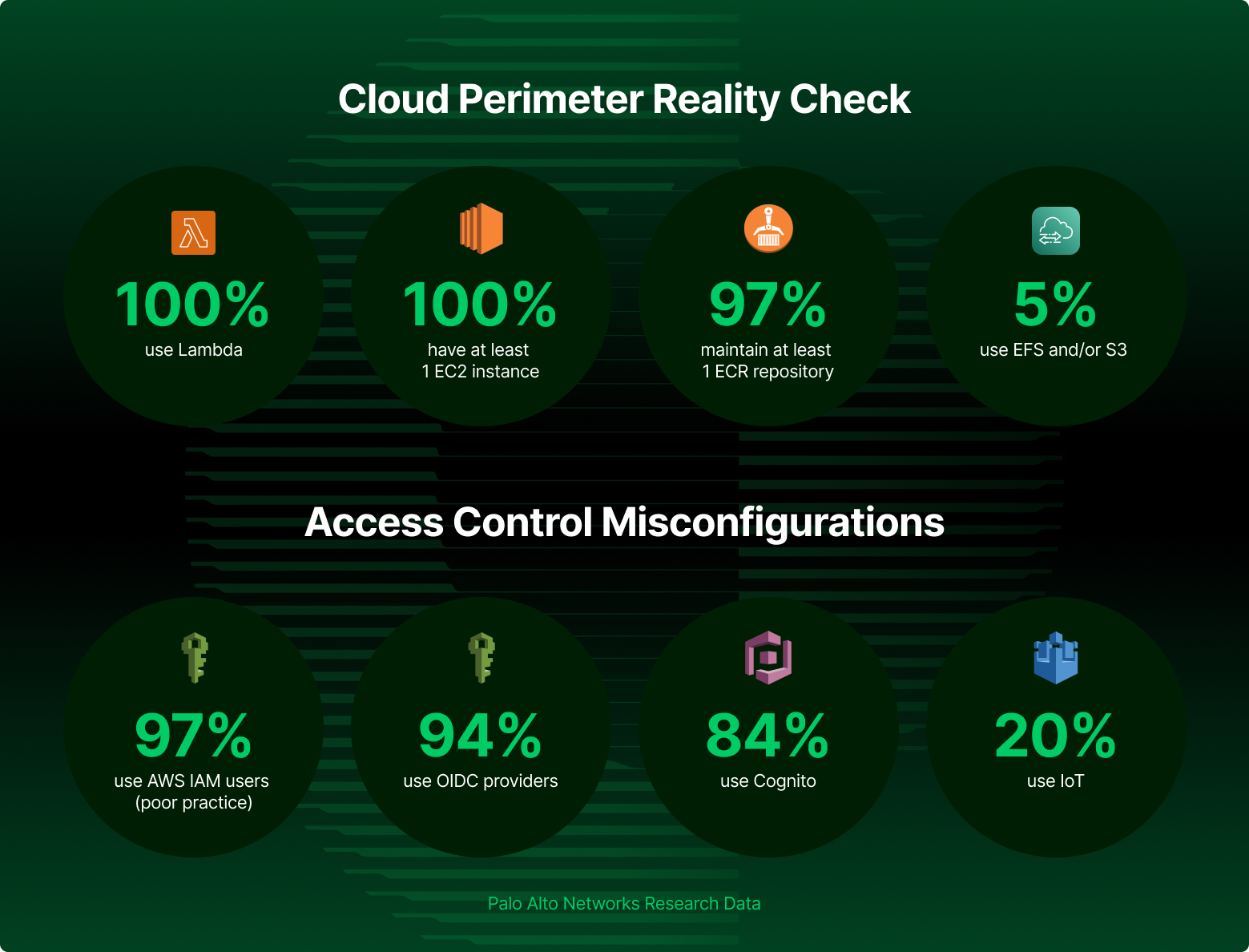

1. Service exposure relates to resource misconfigurations allowing public access, shadow resource creation and further misconfigurations that enable unintended access to AWS resources. Specifically, we will look at the following common services: Lambda, the most common service, used by all organizations with at least one function that has a resource-based policy; and other popular services, including EC2, ECR and DataSync, which is used by 5% of organizations.

2. Access by design relates to misconfigurations of common services that aim to provide a form of access control for AWS resources such as Cognito, IAM RolesAnywhere or IAM itself. Specifically, we will look at the following services: IAM and STS; IoT, which is used by one in five enterprises; and Cognito, which is used by 84% of organizations.

Cortex® Cloud™ can help you identify and remediate these misconfigurations and alert you when they get exploited in real-time. Contact our team for more info.

Service Exposure

This section discusses misconfigurations leading to unintended access to AWS resources (i.e., service exposure). These can increase an environment’s attack surface by expanding its exposed perimeter.

Service exposure is hardly a new threat. Much work has been done on the matter, including most recently by Daniel Grzelak of Plerion (https://awseye.com/). Despite this, public assets are still problematic, as shown by the rise and adoption of attack surface management (ASM) solutions in the past few years.

As our intention isn’t to aid insomniacs by providing an alternative to counting sheep, we won’t list every single AWS service and how it can be made public. Instead, we’ll try to showcase the concept through multiple examples, which we believe constitute the most popular avenues adversaries use to gain a foothold in environments.

We also point you to a list of services that support resource-based policies, given that much of the content discussed in the following examples applies to them.

Lambda

What Is It?

Lambda is AWS’ main serverless offering that is used to run code without the need to provision and manage servers. Since Lambda is mainly event-driven, its functions are widely used as part of larger workflows. This is one of AWS’ most popular services, used in almost every environment, and as such, understanding its security landscape is quite important. The impact of publicly exposing functions varies depending on context: it can cause service disruption, provide attackers with the ability to alter workflows and information, and even grant them access to AWS resources.

What Are the Pitfalls?

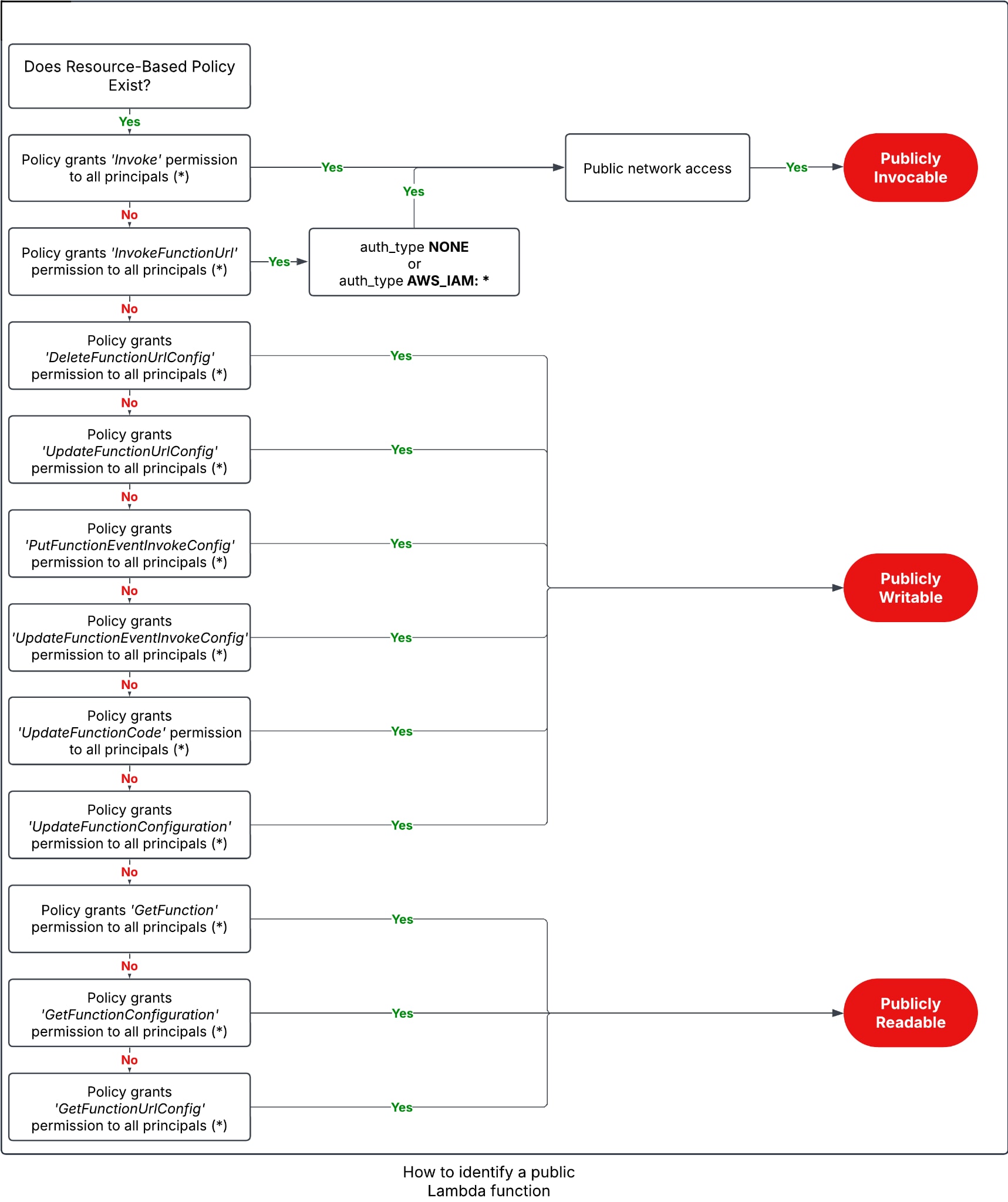

Lambda functions can be made publicly available in multiple fashions, changing the potential impact of successful exploitation.

- A publicly invocable function can be triggered, in which case the impact will depend on its original use case.

- A publicly writable function can be altered to do an adversary’s bidding, with the only limit being the function’s permissions.

- A publicly readable Lambda can allow an adversary to obtain potentially sensitive information pertaining to the function and its related data.

Whether or not a function is made public depends on two elements — authorization and reachability (network). To make a function publicly invocable, it must allow access from the public internet, which is the default configuration. However, since it must allow anyone to invoke it, it also needs a resource-based policy that allows an invoke action for any principal.

As for publicly readable and writable functions, the situation changes slightly. Invoke actions are performed directly against the function and require network reachability. Read and write actions, however, are performed on the function through AWS APIs, which (at least currently) can't be easily restricted to specific networks. This means that the only requirement to make a function publicly readable or writable is having a resource-based policy that enables a relevant action for any principal.
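To make the authorization side of this concrete, here is a minimal sketch that inspects a function's resource-based policy and reports which kinds of exposure it grants to any principal. The function name `public_exposures` and the grouping in `EXPOSURE_ACTIONS` are our own illustrative assumptions, the action list is not exhaustive, and statements that carry a `Condition` are simply skipped rather than evaluated. Network reachability for invocation is not checked here.

```python
import json
from fnmatch import fnmatchcase

# Illustrative (not exhaustive) grouping of Lambda actions by exposure type.
EXPOSURE_ACTIONS = {
    "invoke": ["lambda:InvokeFunction", "lambda:InvokeFunctionUrl"],
    "read": ["lambda:GetFunction", "lambda:GetFunctionConfiguration"],
    "write": ["lambda:UpdateFunctionCode", "lambda:UpdateFunctionConfiguration"],
}


def _as_list(value):
    return value if isinstance(value, list) else [value]


def _is_public_principal(principal):
    # "*" may appear directly or nested under the "AWS" key.
    if principal == "*":
        return True
    if isinstance(principal, dict):
        return "*" in _as_list(principal.get("AWS", []))
    return False


def public_exposures(policy_json):
    """Return the exposure types ("invoke", "read", "write") granted to anyone."""
    policy = json.loads(policy_json)
    found = set()
    for stmt in _as_list(policy.get("Statement", [])):
        # Simplification: conditional statements are not treated as public.
        if stmt.get("Effect") != "Allow" or stmt.get("Condition"):
            continue
        if not _is_public_principal(stmt.get("Principal")):
            continue
        for pattern in _as_list(stmt.get("Action", [])):
            for kind, actions in EXPOSURE_ACTIONS.items():
                if any(fnmatchcase(a.lower(), pattern.lower()) for a in actions):
                    found.add(kind)
    return found
```

The policy JSON would typically come from the function's policy as returned by the AWS API; a real review should also evaluate conditions and explicit deny statements.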

The following diagram describes the different misconfigurations that could lead to publicly exposing a Lambda function.

EC2

What Is It?

EC2, part of AWS’ compute offering, is used to “rent” compute capacity hosted by AWS. The service enables access to compute instances to host applications, while removing the overhead of managing the physical infrastructure. The impact of publicly exposing instances varies depending on context. However, in most cases it will provide some level of access to AWS resources through the instance’s attached role.

What Are the Pitfalls?

Public EC2 instances could prove to be a dangerous initial access vector. Not only can they expose any information on the machine itself, but they could also allow adversaries to expand their foothold by utilizing the machine’s attached role, should it have one. When discussing one’s identity perimeter, compute instances tend to be overlooked under the premise that they're more “traditional” perimeter-related than identity perimeter-related. However, due to widely used solutions that allow cloud compute to be granted identities for cloud access, both must be considered in threat modeling.

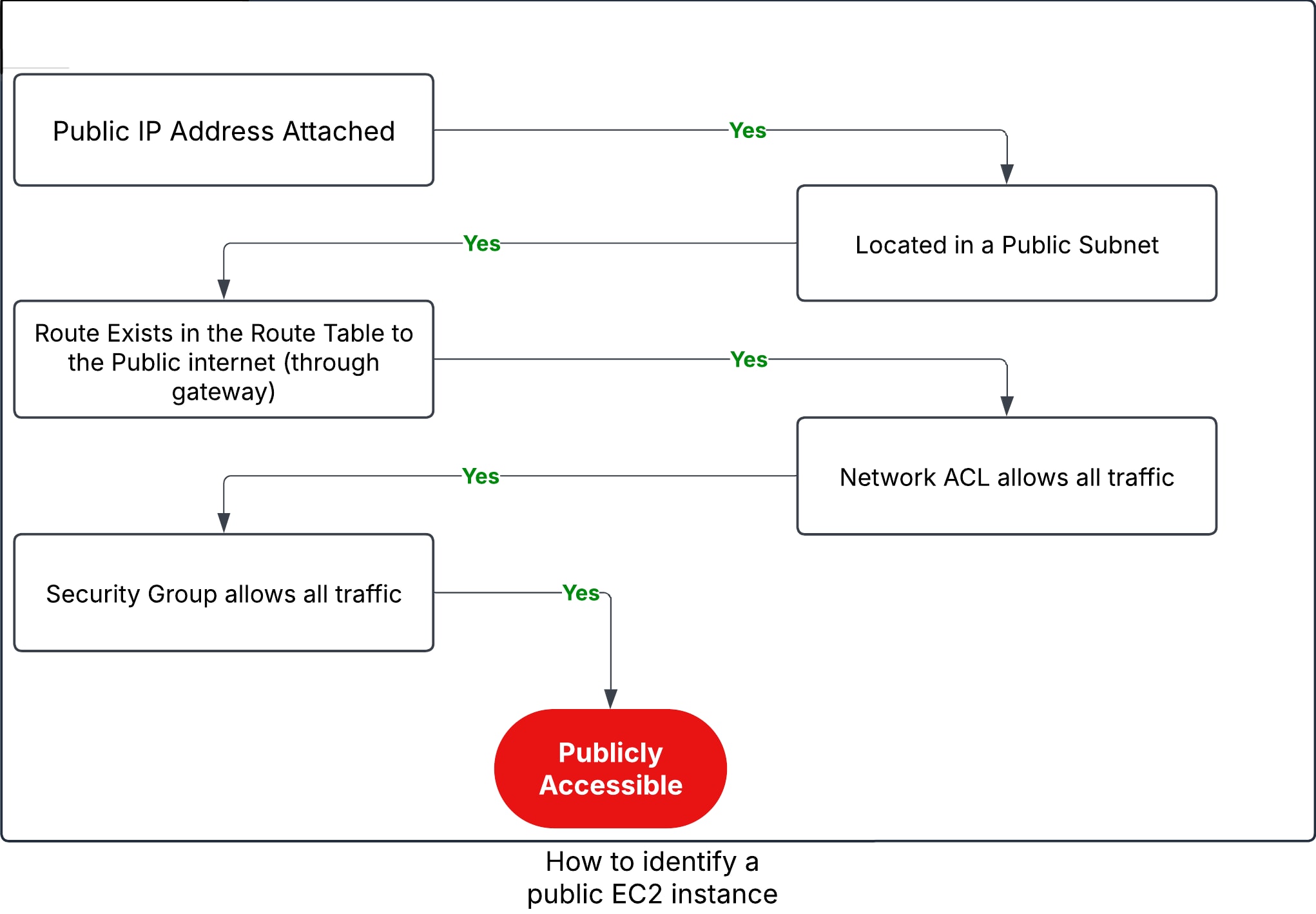

Making an EC2 instance accessible from the public internet is, in fact, not as straightforward as one would expect and less likely to be done accidentally. Doing so would require settings to be configured on multiple resources, such as the instance and a variety of network resources connected to it. The basic required components are a public IP address, a subnet with a route to the public internet (internet gateway), and security configurations that allow it (security groups, network ACLs).
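The way these components combine can be sketched as a simple conjunction: an instance is only reachable on a port if every layer allows it. The `InstanceNetworkView` structure below is a hypothetical, flattened summary that you would need to build yourself from the instance, route table, security group and network ACL data; it is not an AWS API shape.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class InstanceNetworkView:
    """Hypothetical flattened view of one instance's network settings."""
    public_ip: Optional[str]
    subnet_routes_to_igw: bool
    # Ports that each layer opens to 0.0.0.0/0 (inbound).
    sg_open_ports: Set[int] = field(default_factory=set)
    nacl_open_ports: Set[int] = field(default_factory=set)


def publicly_reachable_ports(view):
    """Return ports reachable from the internet; empty if any layer blocks access."""
    if not view.public_ip or not view.subnet_routes_to_igw:
        return set()
    # Both the security groups and the network ACLs must allow the port.
    return view.sg_open_ports & view.nacl_open_ports


view = InstanceNetworkView(
    public_ip="203.0.113.10",
    subnet_routes_to_igw=True,
    sg_open_ports={22, 443},
    nacl_open_ports={80, 443},
)
print(publicly_reachable_ports(view))  # only port 443 passes every layer
```

Modeling the check as an intersection reflects why accidental exposure is less likely here than with a single policy document: one restrictive layer is enough to close the path.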

Other than public network access, there could be additional constraints a malicious actor would need to handle to gain access. For example, SSH access would require obtaining a key. Note that a public subnet is configured with direct access to an internet gateway, while a private subnet is configured with a NAT gateway. Private subnet internet access, then, is dependent on the gateway configurations.

ECR

What Is It?

Elastic Container Registry (ECR) is AWS’ managed container registry. It's used to store container images that can later be shared and deployed through it. As a clarification, this section refers to the private ECR offering and not its public alternative (ECR-Public).

Repositories that enable public access serve adversaries as an initial access vector to entire organizations. The less severe cases could allow unintended access to sensitive data contained in the images. In the worst-case scenario, an existing image could be replaced by a malicious one and infect the environment’s supply chain, potentially gaining access to any machine using the infected image.

What Are the Pitfalls?

ECR is somewhat of a special case, as repositories and registries can be enumerated by anyone with an AWS account. A registry is uniquely identified by its account and region. As such, given enough time, it could be possible to enumerate all public registries by attempting to perform actions on every account-region combination. The number of permutations, though, makes this approach impractical. A different approach is to perform enumeration at the repository level rather than the registry level. In a targeted attack scenario where an account ID is known, an adversary could compile a list of the most popular repository names using resources such as Docker Hub. They could then attempt a brute force attack using the account ID, AWS region list and the compiled repository names. While not a particularly efficient route, the scenario did yield multiple results when tested.
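To get a feel for the size of that search space in your own account, the sketch below builds the candidate repository URIs for one account ID across a set of regions and names. It makes no network calls. The function name and the sample inputs are illustrative assumptions; the URI layout follows the standard private ECR registry hostname format.

```python
from itertools import product


def candidate_repository_uris(account_id, regions, repo_names):
    """Build every account/region/repository URI combination to test."""
    return [
        f"{account_id}.dkr.ecr.{region}.amazonaws.com/{name}"
        for region, name in product(regions, repo_names)
    ]


# Placeholder account ID and a tiny sample of regions and popular image names.
uris = candidate_repository_uris(
    "111111111111", ["us-east-1", "eu-west-1"], ["nginx", "redis"]
)
print(len(uris))  # regions x names
```

Because the candidate count grows as regions multiplied by names, a known account ID keeps the space small enough to be practical, which matches the targeted scenario described above.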

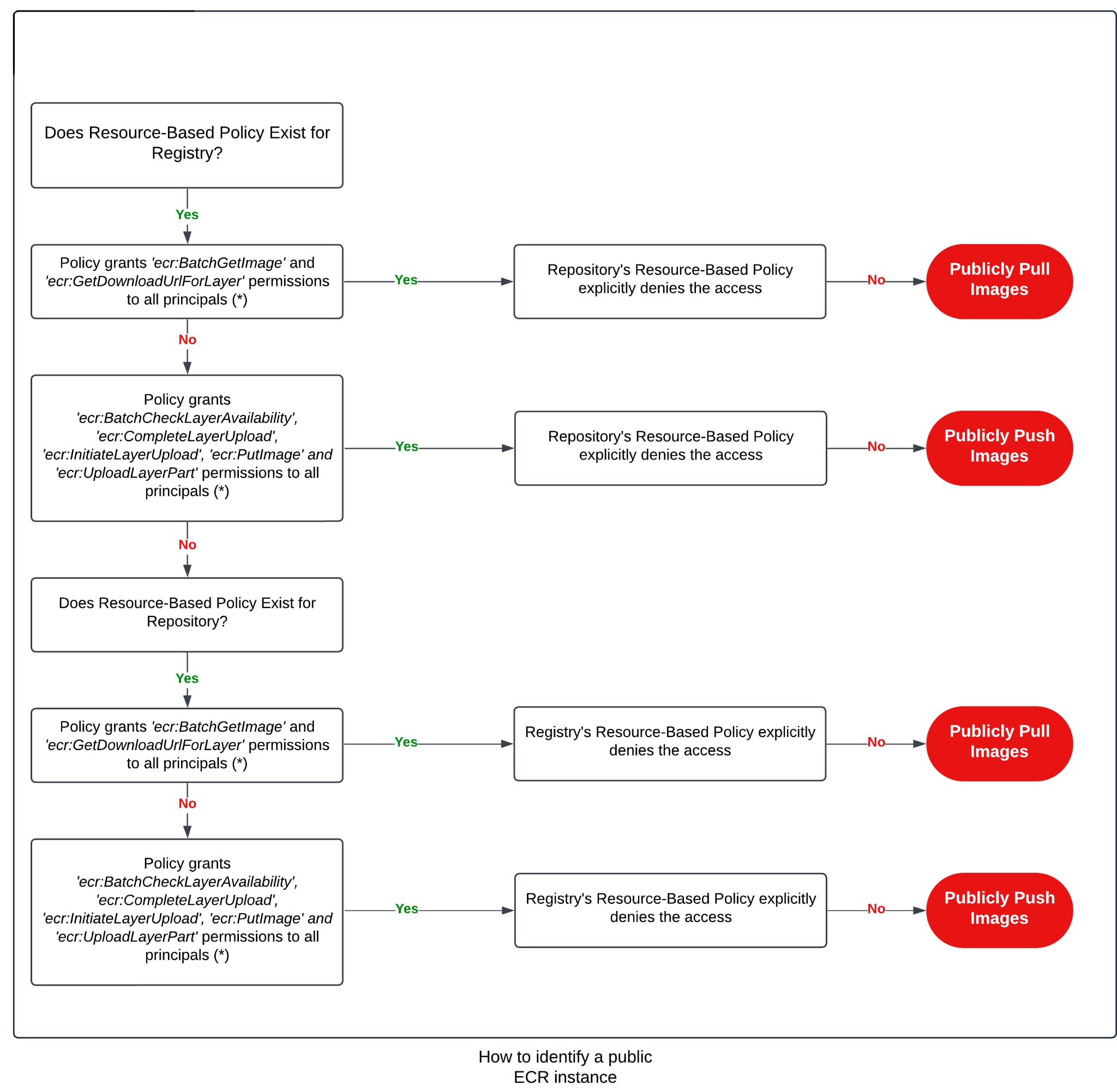

The main confusion that can occur in ECR configuration concerns permissions at the registry versus repository level. Registry permissions affect every repository within it, while repository level permissions are relevant only to the individual repository on which they were set. As in other AWS scenarios, policies in ECR are additive, meaning that allowing public access in one of the policies is enough given that no deny statement exists that contradicts the access granted.

The minimal permissions to pull an image from ECR are ecr:BatchGetImage and ecr:GetDownloadUrlForLayer. The minimal permissions to push an image to ECR are ecr:BatchCheckLayerAvailability, ecr:CompleteLayerUpload, ecr:InitiateLayerUpload, ecr:PutImage and ecr:UploadLayerPart.
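Using the minimal permission sets listed above, a repository or registry policy can be checked for public pull or push capability. This is a rough sketch under stated assumptions: `public_capabilities` is a name we made up, statements with a `Condition` are ignored entirely, and only statements addressed to a wildcard principal are considered.

```python
from fnmatch import fnmatchcase

PULL_ACTIONS = {"ecr:BatchGetImage", "ecr:GetDownloadUrlForLayer"}
PUSH_ACTIONS = {
    "ecr:BatchCheckLayerAvailability",
    "ecr:CompleteLayerUpload",
    "ecr:InitiateLayerUpload",
    "ecr:PutImage",
    "ecr:UploadLayerPart",
}


def _as_list(value):
    return value if isinstance(value, list) else [value]


def _is_public(principal):
    if principal == "*":
        return True
    if isinstance(principal, dict):
        return "*" in _as_list(principal.get("AWS", []))
    return False


def _matches(stmt, action):
    patterns = _as_list(stmt.get("Action", []))
    return any(fnmatchcase(action.lower(), p.lower()) for p in patterns)


def public_capabilities(policy):
    """Report whether anyone can pull or push, given one parsed policy dict."""
    # Simplification: only unconditional statements for a wildcard principal.
    stmts = [
        s for s in _as_list(policy.get("Statement", []))
        if _is_public(s.get("Principal")) and not s.get("Condition")
    ]

    def allowed(action):
        allow = any(s.get("Effect") == "Allow" and _matches(s, action) for s in stmts)
        deny = any(s.get("Effect") == "Deny" and _matches(s, action) for s in stmts)
        return allow and not deny  # an explicit deny overrides an allow

    return {
        "pull": all(allowed(a) for a in PULL_ACTIONS),
        "push": all(allowed(a) for a in PUSH_ACTIONS),
    }
```

Since ECR policies are additive, a fuller check would merge the statements from both the registry and repository policies before evaluating them.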

Multiple avenues can be taken to safeguard your environment from public ECR scares. Rather than relying solely on repository-scoped permissions, set access at the registry level for anyone who may need access to any repository within it and explicitly deny everyone else. At that point, you can scope individual repository permissions as needed using a combination of allow and deny statements without having to worry about accidental access given to entities outside the organization (obviously, remain vigilant and grant access only to those within the organization who require it). The following diagram describes the different misconfigurations that could lead to public exposure.

DataSync

What Makes It Public?

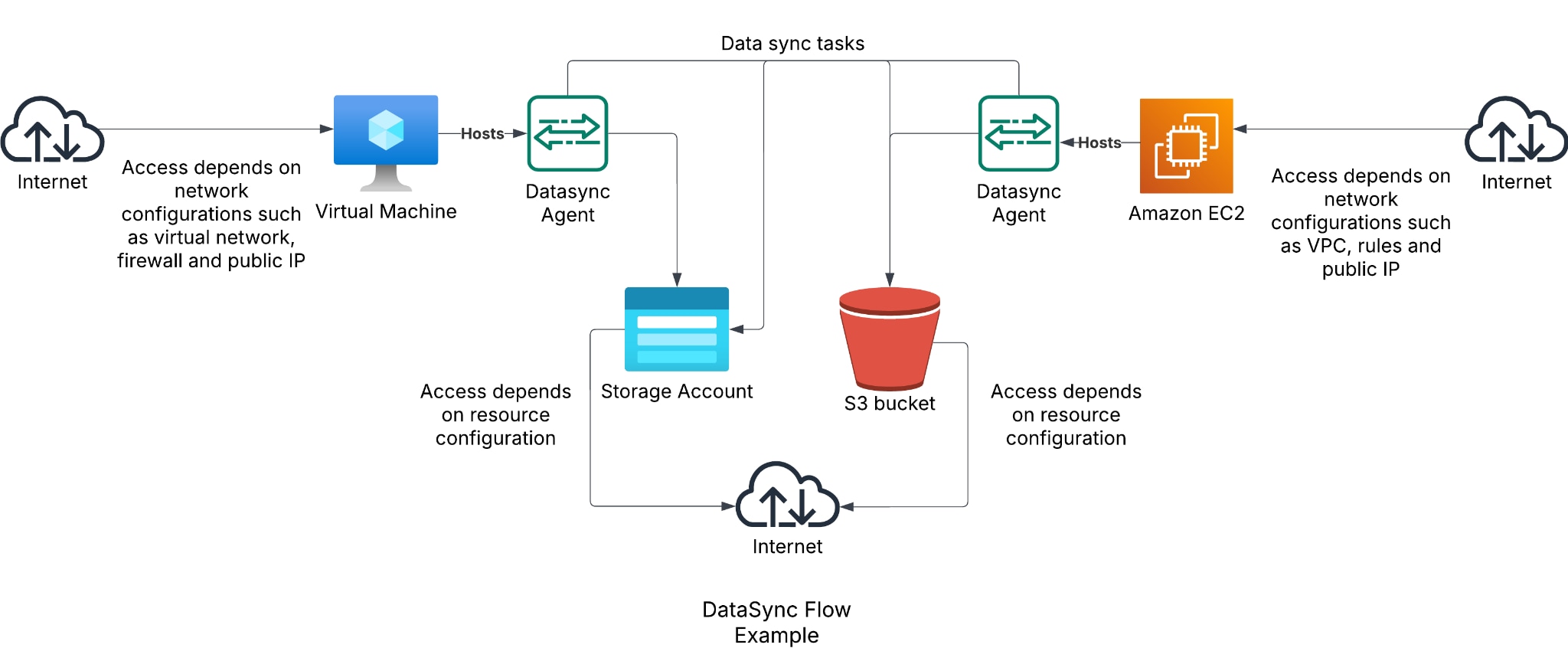

DataSync is AWS’ managed data migration service. It's used to migrate data to and from AWS, supporting locations such as AWS services (e.g., S3, EFS), Azure storage, and on-premises solutions (e.g., SMB, NFS).

DataSync locations are not resources per se, but rather a “DataSync representation” of a resource located elsewhere. As such, most misconfigurations that could result in an initial access vector don’t actually happen within the realm of DataSync. Let’s first break down the resources available in DataSync:

- Agents — To access any type of location outside AWS resources, you must first configure and deploy an agent. DataSync supports deployment of agents on VMware, KVM, Hyper-V and EC2.

- Locations — DataSync’s representation of a storage solution that acts as an endpoint for DataSync tasks.

- Tasks — DataSync’s data transfer/migration configurations.

What Are the Pitfalls?

As you can see, many options are available for configuring the service, and as such, there are many potential pitfalls. First, let’s discuss agents. When creating an agent (regardless of type), we must also choose a type of service endpoint — public, FIPS or VPC. Choosing a public endpoint would result in the information being transmitted over the public internet. In addition to this configuration, the other risks of initial access with regard to the agents lie outside of the DataSync service, and rather with the configuration of the instance on which the agent is installed. For example, if the agent is installed on an Azure Compute instance or AWS EC2 instance, access to the agent would depend on access configurations of the machine itself and the network in which it resides.

With regard to AWS EC2 instances, mapping the existing agent hosts within the environment can be done with near certainty by locating instances using an Amazon Machine Image (AMI) with a prefix of aws-datasync (amazon/aws-datasync<something>). When the agents are hosted on-premises or in other cloud provider environments, the task is a lot trickier. As such, the recommendation for agents is quite simple and relates to compute instances as a whole: make sure that no compute instance in your environment is publicly accessible unless it EXPLICITLY needs to be, in which case specific verification should be made on that instance to ensure it isn’t hosting an agent.
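The AMI-based mapping can be sketched as a simple filter. The input shapes here are simplified assumptions: `instances` is a list of records with `InstanceId` and `ImageId`, and `images` maps an image ID to a record with `Name` and `ImageOwnerAlias`, loosely modeled on what EC2 describe calls return. The function name is hypothetical.

```python
def likely_datasync_agent_hosts(instances, images, prefix="aws-datasync"):
    """Return IDs of instances launched from an Amazon-owned aws-datasync AMI."""
    hosts = []
    for inst in instances:
        image = images.get(inst["ImageId"], {})
        owned_by_amazon = image.get("ImageOwnerAlias") == "amazon"
        if owned_by_amazon and image.get("Name", "").startswith(prefix):
            hosts.append(inst["InstanceId"])
    return hosts


# Placeholder inventory for illustration only.
instances = [
    {"InstanceId": "i-0aaa", "ImageId": "ami-111"},
    {"InstanceId": "i-0bbb", "ImageId": "ami-222"},
]
images = {
    "ami-111": {"Name": "aws-datasync-example", "ImageOwnerAlias": "amazon"},
    "ami-222": {"Name": "my-web-server", "ImageOwnerAlias": None},
}
print(likely_datasync_agent_hosts(instances, images))
```

Checking the owner alias alongside the name prefix avoids flagging a privately built image that merely happens to reuse the name.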

Second, we’ll discuss locations. Once again, since the resources that locations map to do not reside within DataSync itself, the question of their accessibility is specific to the location type. As such, ensure that access to the locations used by DataSync is explicitly limited to intended targets. The final point regarding locations is the matter of abandoned resources, more specifically S3 buckets.

When using an S3 bucket as a location, we can sync data TO and FROM it (given that the permissions are granted to the task through a DataSync service role). When a bucket is deleted and recreated in a different account, the sync will continue to work (given a schedule/manual run), thus syncing to the “new” bucket. A couple of important points worth making:

- In the event of a scheduled task, after a certain number of failed attempts, the service will stop that task’s schedule. This means that an attacker relying on a scheduled task will have only a relatively short window of opportunity to exploit it — between the deletion of the original bucket and the creation of the malicious one.

- The default role created using the “autogenerate role” feature contains a “resourceaccount” condition (this needs to be changed based on the location of the bucket). If this condition exists, the attack is rendered moot.

The implications of a successful attack could be the leakage of sensitive information, loss of information, or planting of false information. As such, it's recommended to ensure that the “resourceaccount” condition exists for every DataSync service role.
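A check for that recommendation might look like the sketch below, which lists the S3 allow statements in a role's permissions policy that lack the condition. We assume the “resourceaccount” condition corresponds to the `aws:ResourceAccount` global condition key; the function name and the sample policy are illustrative.

```python
def _as_list(value):
    return value if isinstance(value, list) else [value]


def statements_missing_resource_account(policy):
    """Return the Sids of S3 Allow statements with no aws:ResourceAccount condition."""
    missing = []
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        if not any(a == "*" or a.lower().startswith("s3:") for a in actions):
            continue
        # Condition keys are case-insensitive, so compare in lowercase.
        keys = {k.lower() for op in stmt.get("Condition", {}).values() for k in op}
        if "aws:resourceaccount" not in keys:
            missing.append(stmt.get("Sid", "<no Sid>"))
    return missing


# Placeholder policy: the second statement omits the condition.
role_policy = {
    "Statement": [
        {
            "Sid": "Bucket",
            "Effect": "Allow",
            "Action": ["s3:ListBucket"],
            "Resource": "arn:aws:s3:::example-bucket",
            "Condition": {"StringEquals": {"aws:ResourceAccount": "111111111111"}},
        },
        {
            "Sid": "Objects",
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:PutObject"],
            "Resource": "arn:aws:s3:::example-bucket/*",
        },
    ]
}
print(statements_missing_resource_account(role_policy))
```

The sketch only confirms the key is present; it does not verify that the account ID in the condition is the one that actually owns the bucket.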

Access by Design

In this section, we refer to misconfigurations that allow the potential abuse of services designed to enable some form of access. Some of these services, such as Cognito and IAM, are mature and fairly well-known. In fact, many of their issues have been addressed either through changes to the service itself or through AWS-released advice, best practices and default configuration changes. Still, new issues arise, both for mature and lesser-known services.

The section aims to equip readers with a holistic view of the access by design services AWS offers, providing a centralized location for users to return to when they want to check their environments for misconfigurations. As such, this section will cover “obvious” misconfigurations, as well as more recent, interesting choices.

The services included in this section are IAM and STS, Cognito and IoT (for IAM RolesAnywhere, see https://unit42.paloaltonetworks.com/aws-roles-anywhere/).

IAM and STS

AWS Identity and Access Management (IAM) is AWS’ service used to control access to AWS resources. It does so using policies, roles, users, security credentials, and more. Over the years, IAM has been tested rigorously and has continuously been improved to ensure that secure access control is available to AWS customers. However, new issues still arise, and many known pitfalls still tend to be missed or overlooked, thereby subjecting environments to known initial-access vectors.

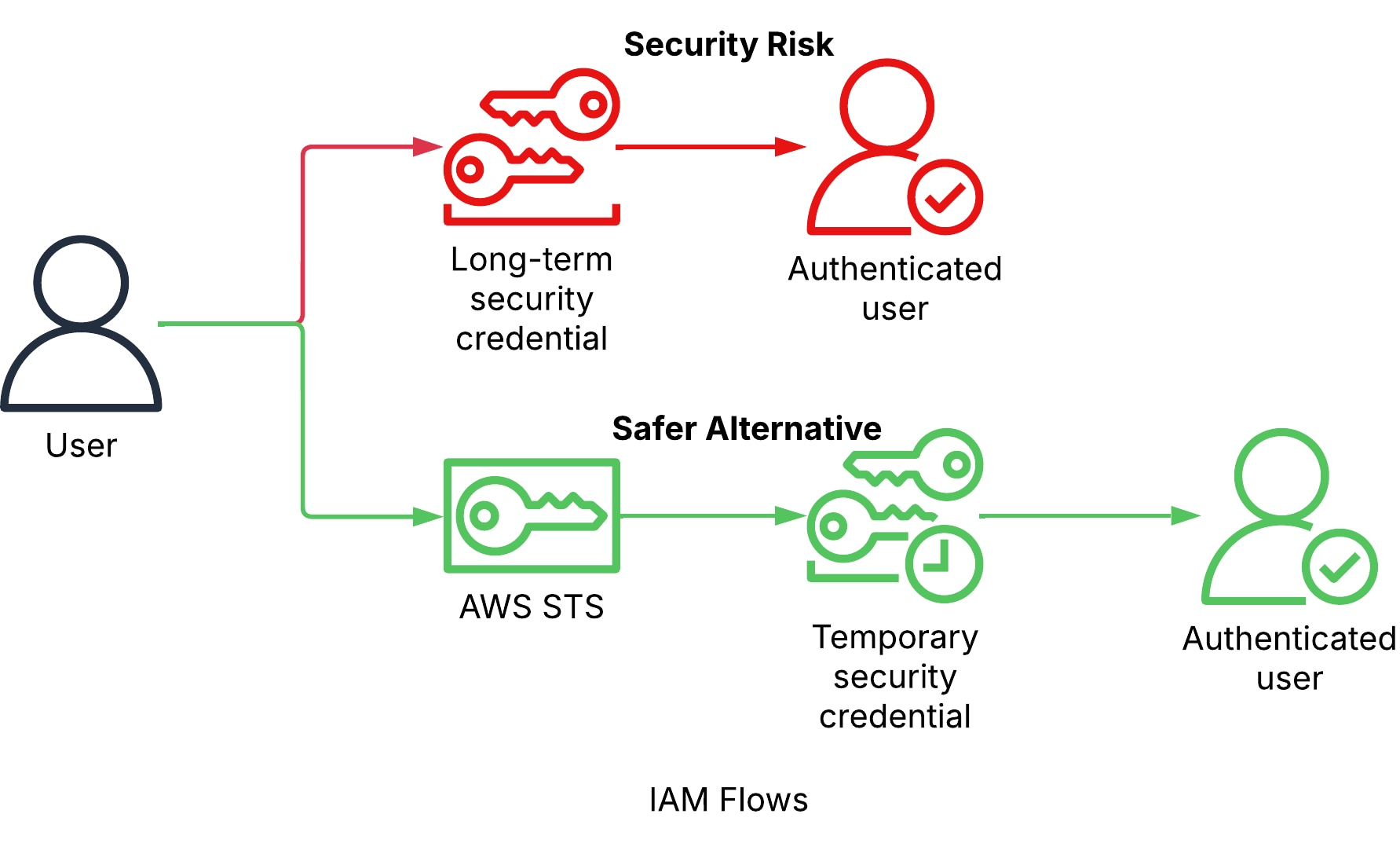

Our research shows that 97% of customers have AWS users in their cloud accounts. Security best practices recommend using roles and temporary credentials as opposed to IAM users and access keys.

In this section, we will briefly describe these common pitfalls and how to avoid them. Note that while overprivileged entities represent a severe issue that must be addressed, this blog is focused on initial access. As such, rather than concentrating on permissions granted to entities, we will look at the access to these entities.

We’ll start by looking at IAM users. While they're rarely the recommended method to provide environment access (as opposed to SSO and role assumption for short-lived credentials, for example), they're still used in many environments. The main pitfall here regards the use of long-lived credentials (i.e., access keys). When credentials are compromised, short-lived credentials leave a small window for abuse, as opposed to long-lived credentials that could allow attackers extended access to the environment. Additionally, the danger of credentials having been “forgotten” somewhere (such as committed to a codebase) is heightened due to this issue and related security hygiene oversights.
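One way to surface long-lived credentials is to flag active access keys older than a chosen threshold. The sketch below assumes key records shaped like IAM access key metadata (`AccessKeyId`, `Status`, `CreateDate`); the 90-day default is an arbitrary assumption on our part, not a figure from this research.

```python
from datetime import datetime, timedelta, timezone


def stale_access_keys(keys, max_age_days=90, now=None):
    """Return IDs of active access keys created more than max_age_days ago."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [
        k["AccessKeyId"]
        for k in keys
        if k["Status"] == "Active" and k["CreateDate"] < cutoff
    ]


# Placeholder key metadata with a fixed "now" so the result is reproducible.
utc = timezone.utc
keys = [
    {"AccessKeyId": "AKIAOLDEXAMPLE", "Status": "Active",
     "CreateDate": datetime(2024, 1, 1, tzinfo=utc)},
    {"AccessKeyId": "AKIANEWEXAMPLE", "Status": "Active",
     "CreateDate": datetime(2024, 12, 15, tzinfo=utc)},
    {"AccessKeyId": "AKIAOFFEXAMPLE", "Status": "Inactive",
     "CreateDate": datetime(2023, 1, 1, tzinfo=utc)},
]
print(stale_access_keys(keys, now=datetime(2025, 1, 1, tzinfo=utc)))
```

Key age is only a proxy for risk; a key that was created recently but committed to a codebase is just as exposed.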

Next, we’ll look at roles. AWS IAM Roles are identities with permissions that can be assumed using the STS (Security Token Service) by any number of entities that require it, based on their trust policy. As opposed to users, roles are not associated with long-term credentials. Instead, upon assumption, the entity is provided with short-lived security credentials by STS.

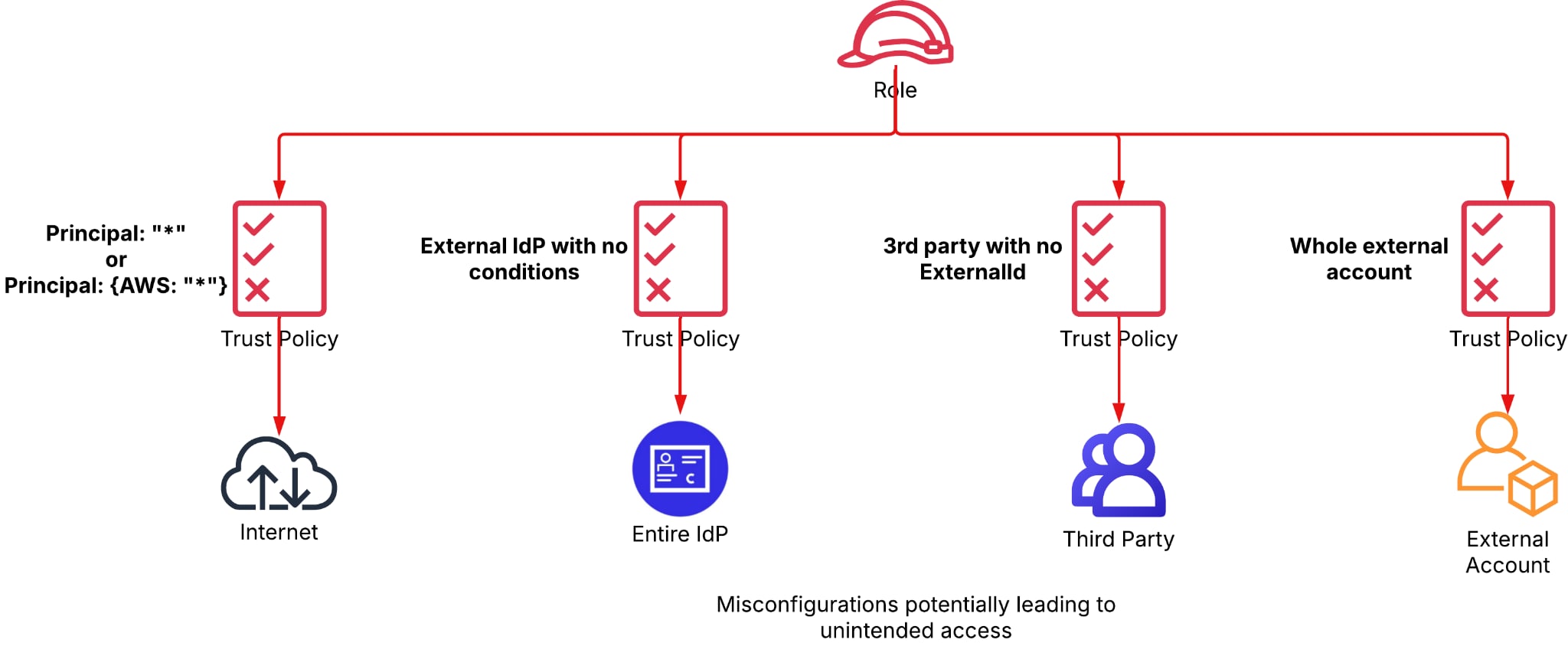

When examining the intersection of roles and initial access, what we find is the role’s trust policy. A trust policy is in the form of a JSON document and determines who can and can’t assume the role. It's a form of the previously discussed resource-based policy, specific to roles. The supported principals that can be granted access are users, roles, accounts and external identity providers (IdP).

Allowing public role assumption presents an initial access vector to the environment, with the potential impact being determined by the role’s permissions. The easiest way of doing this is “principal: *” (“*” can also be nested under the “AWS” key). However, there are other misconfigurations that could result in a public role. A (fairly) recently discovered one relates to OIDC integrations. In such cases, using the “principal” key doesn't suffice, and conditions such as “sub” and “aud” must be used to further narrow the allowed entities. These conditions are mapped to claims in the OIDC token to ensure that only intended access is allowed.

Other misconfigurations that could be dangerous but not necessarily grant public access to the role include enabling access to a third-party role without requiring ExternalId and unrestricted cross-account access, which exposes the role to potentially unknown risks, depending on the foreign account’s configurations. Lastly, a service can be granted access to a role. While this configuration only grants access to resources of the specified service deployed within the role’s account, if one of the resources allows public access (e.g., a public Lambda function) the role can be accessed publicly.
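The trust policy pitfalls described above can be approximated with a small checker. This is a heuristic sketch, not a policy evaluator: the function name and finding strings are our own, it treats any cross-account principal without an `sts:ExternalId` condition as worth reviewing (which will over-report for accounts you own), and it only looks for the presence of `sub` and `aud` condition keys rather than judging their values.

```python
import re


def _as_list(value):
    return value if isinstance(value, list) else [value]


def _account_of(principal):
    """Return the 12-digit account ID of an AWS principal string, or None."""
    if re.fullmatch(r"\d{12}", principal):
        return principal
    parts = principal.split(":")
    return parts[4] if principal.startswith("arn:") and len(parts) > 4 else None


def trust_policy_findings(policy, own_account_id):
    findings = []
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal", {})
        if principal == "*":
            principal = {"AWS": "*"}
        keys = {k.lower() for op in stmt.get("Condition", {}).values() for k in op}
        aws_principals = _as_list(principal.get("AWS", []))
        if "*" in aws_principals and not keys:
            findings.append("wildcard principal without conditions")
        for p in aws_principals:
            account = _account_of(p)
            if account and account != own_account_id and "sts:externalid" not in keys:
                findings.append(f"cross-account trust for {account} without sts:ExternalId")
        for provider in _as_list(principal.get("Federated", [])):
            if ":oidc-provider/" not in provider:
                continue
            # OIDC claims appear as condition keys such as "<issuer>:sub".
            for claim in ("sub", "aud"):
                if not any(k.endswith(":" + claim) for k in keys):
                    findings.append(f"OIDC trust for {provider} without a '{claim}' condition")
    return findings
```

Service principals are deliberately not covered here, since their exposure depends on the resources of that service rather than on the trust policy itself.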

Another potential avenue for initial access within the IAM service is IAM Roles Anywhere. For information regarding its common pitfalls, please refer to our blog at https://unit42.paloaltonetworks.com/aws-roles-anywhere/.

IoT

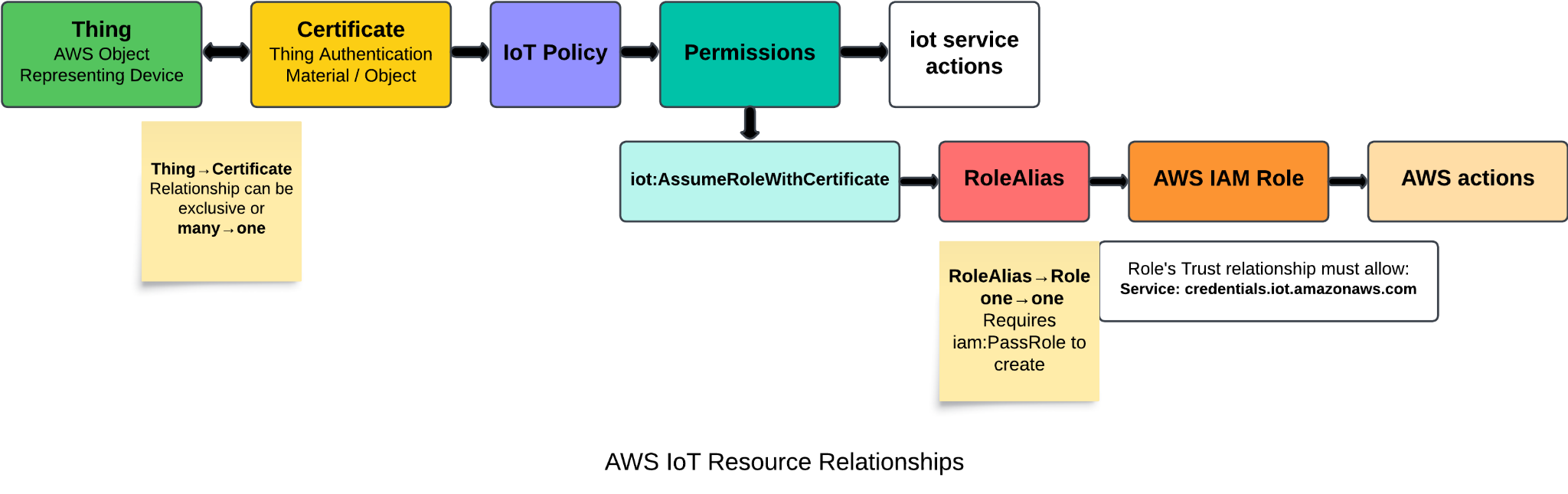

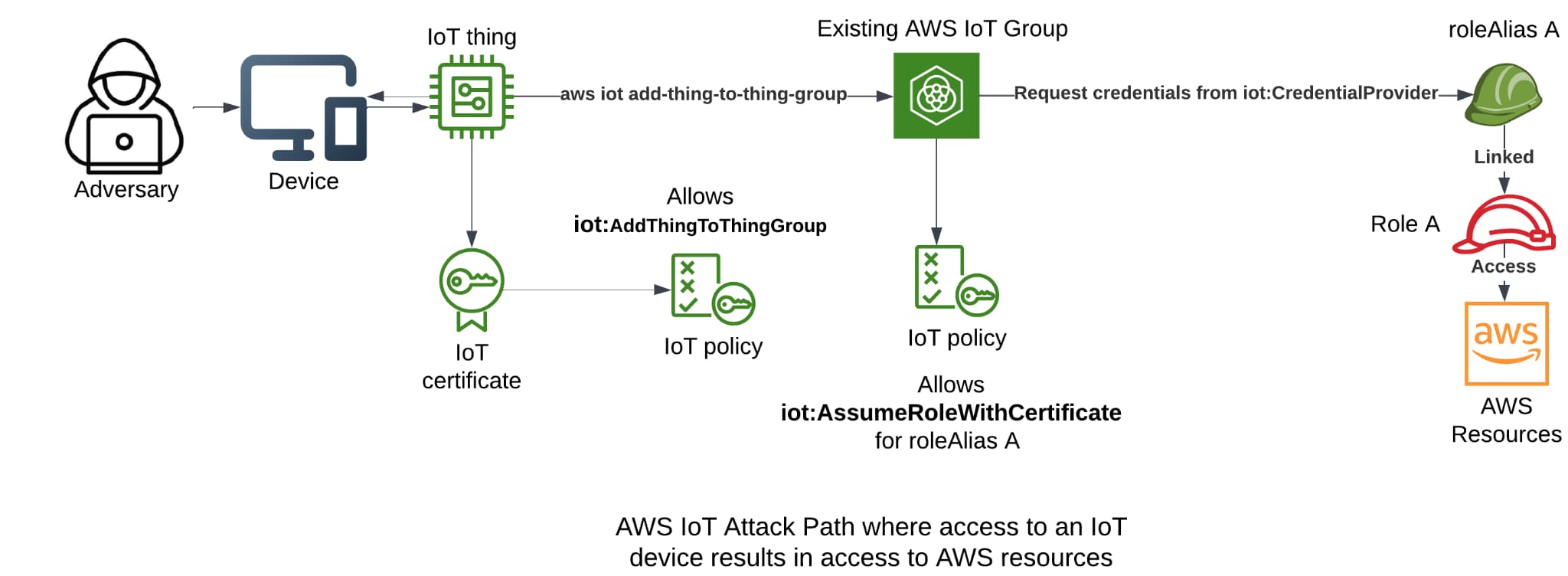

AWS IoT (internet of things) is an interface provided by AWS used to connect, integrate and manage IoT devices in AWS environments. It does so using multiple resources, with things, certificates, policies and role aliases most relevant to this blog post.

Thing

AWS IoT object that represents an IoT device: It can be given attributes, be added to groups, and more. AWS IoT also supports running commands (and viewing command history) on things. Most importantly for this blog, a certificate can be attached to it that will be used for authentication.

Certificate

Authentication material for AWS: The certificate can be signed by a registered or an unregistered CA. A certificate can be used to authenticate multiple things, while each thing can only be associated with one certificate. Certificates can have policies attached to them to provide IoT permissions.

Policy

Permissions policy in the standard AWS JSON structure: Policies can be granted to “targets,” or certificates, in a one-to-many relationship. If a policy allows the iot:AssumeRoleWithCertificate permission on a given roleAlias, whoever authenticates with the certificate can assume the role mapped to the roleAlias allowed by the policy.

RoleAlias

An IoT object mapped to an IAM role allowing the granting of AWS permissions to IoT things (through a chain of thing->certificate->policy->roleAlias->role): The roleAlias was created to allow updating roles for an IoT device without being required to restart it. Creating a roleAlias requires two conditions — for the mapped role to allow “credentials.iot.amazonaws.com” in its trust policy and for the creator to possess the iam:PassRole permission on the role (preventing users from editing existing roleAliases for privilege escalation).

AWS IoT can present a unique initial access vector in scenarios where edge devices in an organization are given certificates used to authenticate to AWS IoT. In cases where the certificate is associated with a policy that allows assumption of a roleAlias, immediate AWS access could be gained. In other cases, combinations of AWS IoT permissions (through an IoT policy) that allow modification of certain IoT resources could be used to gain AWS access. For example, if a policy exists that allows assumption of an existing roleAlias, the ability to attach the policy to the certificate on the device would result in gaining the AWS permissions of the role linked to the roleAlias. Another example is if a thing group exists with an attached policy allowing assumption of a roleAlias, having the permission to add the thing linked to the device certificate could result in gaining the AWS permissions of the role linked to the roleAlias.
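The thing->certificate->policy->roleAlias->role chain, including the thing group variant, can be walked mechanically once the relationships are inventoried. The `inventory` dictionary layout and the function name below are hypothetical; you would populate the mappings from your own IoT resource listings.

```python
def reachable_roles(cert, inventory):
    """Return IAM roles a certificate can reach via policies and role aliases."""
    # Policies attached directly to the certificate.
    policies = set(inventory["cert_policies"].get(cert, []))
    # Policies inherited through thing groups of the things using this certificate.
    for thing in inventory["cert_things"].get(cert, []):
        for group in inventory["thing_groups"].get(thing, []):
            policies |= set(inventory["group_policies"].get(group, []))
    roles = set()
    for policy in policies:
        # Role aliases the policy allows iot:AssumeRoleWithCertificate on.
        for alias in inventory["policy_role_aliases"].get(policy, []):
            if alias in inventory["alias_roles"]:
                roles.add(inventory["alias_roles"][alias])
    return roles


# Placeholder inventory: the direct policy is harmless, the group policy is not.
inventory = {
    "cert_policies": {"cert-1": ["telemetry"]},
    "cert_things": {"cert-1": ["sensor-7"]},
    "thing_groups": {"sensor-7": ["gateways"]},
    "group_policies": {"gateways": ["assume-alias"]},
    "policy_role_aliases": {"assume-alias": ["ops-alias"]},
    "alias_roles": {"ops-alias": "arn:aws:iam::111111111111:role/iot-ops"},
}
print(reachable_roles("cert-1", inventory))
```

This covers only access that already exists. The escalation cases above, where a device can attach a policy or join a thing group, would need the IoT permissions themselves to be modeled as additional edges.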

Below is an example of a potential attack path:

Cognito

AWS Cognito is an authentication and authorization provider. Its main resources are user pools and identity pools.

A user pool is essentially an OIDC IdP, a directory for application authentication and authorization (ish). Some of its features allow sorting users into groups (which can be granted AWS roles), adding app clients (which represent the applications that use the user pool as an IdP), setting sign-up and sign-in flows, and controlling authentication methods.

An identity pool is a directory of federated identities used to grant access to AWS resources. Some of its features allow connecting multiple IdPs and granting both authenticated and guest access.

In this section, we will briefly describe common misconfigurations in the Cognito service that could lead to unintentional initial access vectors and endanger the environment. We will start with issues pertaining to user pools and continue with those pertaining to identity pools.

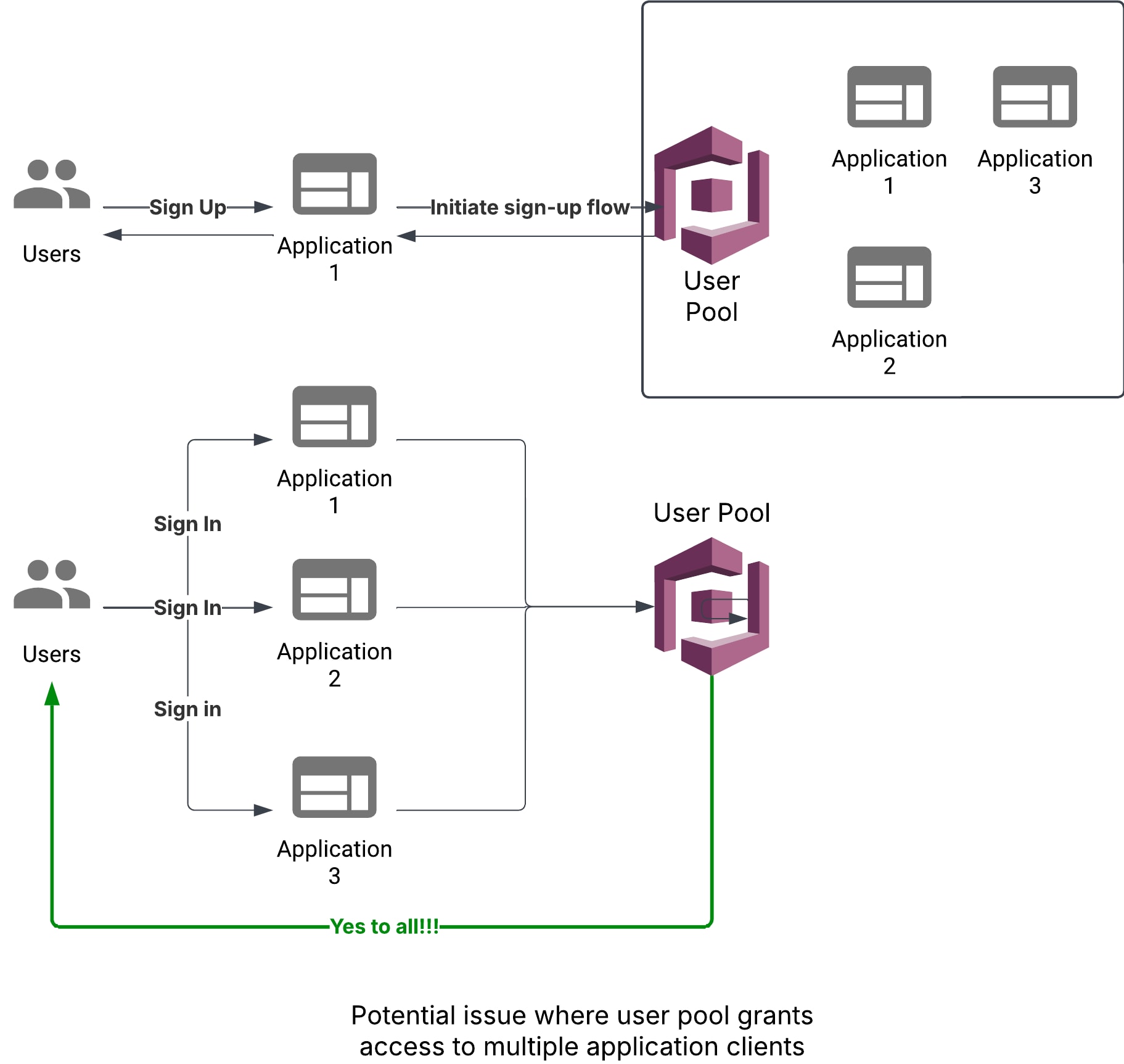

When it comes to using Cognito user pools, there are two factors — the user pool configurations and the application client. User pools handle the heavy lifting with regard to authentication, but authorization must be handled by the application client. Other than authorization within the application client, there’s also a matter of authorization between application clients. Each user pool may be used by multiple application clients.

At this time, though, there’s no way to limit a user’s access to specific applications within a user pool. The access is binary: either you have it or you don’t. While such limitations may be applied by the applications themselves, centralizing authentication while decentralizing authorization could lead to unintentional access granted due to potential management overhead and different implementations led by separate application teams and owners. Lastly, self-registration is enabled by default. As such, anyone could potentially sign up and gain access to all applications within the user pool.

User pools can also be used to grant access to AWS resources via the attachment of roles to groups. However, this requires “manually” adding users to a group, and while risks may arise from using this feature, it's not within the scope of initial access and this blog.

From an identity pool perspective, the biggest threat is creating an identity pool with “anonymous” guest access, which creates an initial access vector whose impact varies based on the IAM role granted to guests. It’s worth noting that the default guest permissions are cognito-identity:GetCredentialsForIdentity, so to gain access, an attacker would first need an identity-ID and auth material. Another issue could be using a user pool with self-sign-up enabled as an IdP, potentially allowing anyone to sign up and gain access to AWS resources within the environment.
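Both identity pool issues can be flagged from configuration data alone. The sketch below assumes dictionaries shaped like the Cognito describe responses, using the `AllowUnauthenticatedIdentities`, `CognitoIdentityProviders` and `AdminCreateUserConfig.AllowAdminCreateUserOnly` fields; the function name, finding strings and sample IDs are illustrative, and user pools missing from the lookup are skipped rather than guessed at.

```python
def identity_pool_findings(identity_pool, user_pools):
    """Flag guest access and linked user pools that allow self sign-up."""
    findings = []
    if identity_pool.get("AllowUnauthenticatedIdentities"):
        findings.append("guest (unauthenticated) access enabled")
    for provider in identity_pool.get("CognitoIdentityProviders", []):
        # ProviderName ends with the user pool ID after the last slash.
        pool_id = provider["ProviderName"].rsplit("/", 1)[-1]
        if pool_id not in user_pools:
            continue  # unknown pool: nothing to evaluate
        config = user_pools[pool_id].get("AdminCreateUserConfig", {})
        if not config.get("AllowAdminCreateUserOnly", False):
            findings.append(f"user pool {pool_id} allows self sign-up")
    return findings


# Placeholder configuration for illustration only.
identity_pool = {
    "AllowUnauthenticatedIdentities": True,
    "CognitoIdentityProviders": [
        {"ProviderName": "cognito-idp.us-east-1.amazonaws.com/us-east-1_EXAMPLE",
         "ClientId": "exampleclient"},
    ],
}
user_pools = {
    "us-east-1_EXAMPLE": {"AdminCreateUserConfig": {"AllowAdminCreateUserOnly": False}},
}
print(identity_pool_findings(identity_pool, user_pools))
```

A finding here only indicates that the door is open; the actual impact still depends on the IAM roles mapped to guest and authenticated identities.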

Learn More

Cortex Cloud can help you identify and remediate these misconfigurations and alert you when they get exploited in real-time. Contact our team for more info.